Reposted from: http://stillshare.blogspot.tw/2011/12/renpy3-project.html

A fairly complete set of tutorials: http://stillshare.blogspot.tw/2011_12_01_archive.html

Ren'Py download:

http://www.renpy.org/latest.html

Before creating a New Project, you can first open the Options screen.

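The Text Editor and JEdit settings in the upper half select which editor is used. We normally leave these alone, so they can be ignored for now. The important setting is Projects Directory below them: it chooses where new projects are created and which folder existing projects are loaded from. Set it however you like. If you later create a project and cannot find its folder, open this screen to check whether it ended up somewhere else.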

Now we can create our first project with Ren'Py. As mentioned last time, after you click New Project the program asks for a name:

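Any name will do as long as it is in English. The project name has no bearing on the project's content, so just pick something you will recognize. I'll use Demo here and press Enter, then pick any Theme and color scheme. The program returns to the main screen, except that the project name in the upper-left corner is now the one just created.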

Now let's look at the folder that holds the project and see what has been added. In my case it is the same folder as the Ren'Py program itself:

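A Demo folder has appeared at the top left. The project name only determines the folder name; things like the title of the game window can be adjusted later. Next, open the Demo folder and see what is inside: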

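There is a game folder and a README.html. README.html is the user manual; you can edit it as needed and ship it with the game later. Leaving it untouched is fine too, since the official template is already quite detailed, though it is in English. Next, open the game folder: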

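What are these files? The .rpy files are script files. The other files with the same name but a different extension are extra data that JEdit uses when it opens that script. When you update the game later, you only need to update the .rpy files. Now let's go back to the Ren'Py launcher: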

Click Launch to see what Ren'Py offers out of the box:

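A new window opens. The colors and button styles will differ depending on the theme and color scheme you picked earlier, but the same five buttons always appear. Quit needs no introduction: it exits the game. Help opens the README.html mentioned above. Yes, Ren'Py can invoke the browser directly to open a web page file or a URL. krkr can do this too; NScripter cannot.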

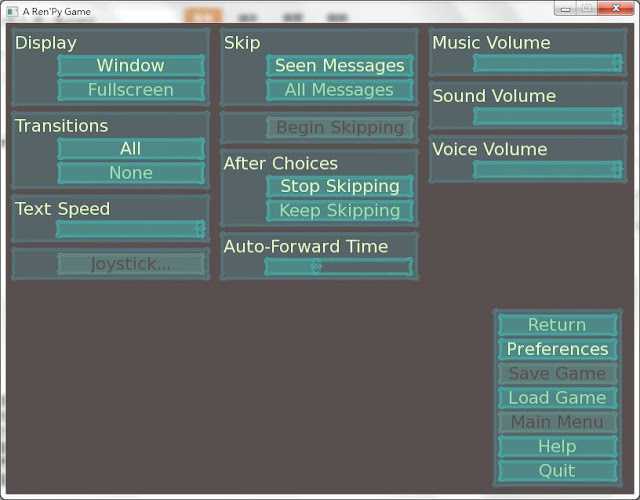

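Let's look at Preferences first. This is what other engines call config; the wording differs, and everything here can later be adjusted by editing the script files: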

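Ta-da! A full-featured config screen, with a number of shortcuts at the lower right. After Choices controls whether skip mode keeps skipping or stops once you make a choice at a branch. Transitions turns scene-change effects on or off. For now just take a look. Next, the Load screen: click Load Game at the lower right. The Save Game button is still disabled because we have not started a game yet: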

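A bare-bones screen, but functionally complete. Ren'Py has an Auto Save feature whose files go on the Auto page, and Quick holds the Quick Save files; the rest is self-explanatory. Now click Return to go back to the main menu and click Start Game to see the default game screen: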

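What is the checkerboard in the background? Anyone who has used image-editing software will recognize it: it means nothing is there. Note the lower-right corner of the dialogue box: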

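These are shortcut buttons that are set up for you from the start. Auto advances the text automatically, Q.Save is Quick Save, and Q.Load is Quick Load. So even if you know nothing about programming, Ren'Py is still convenient to use.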

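Now for the mouse buttons. The left button advances to the next line. The middle button hides the dialogue box so you can see the background (an unusual design; sorry, this is unavailable if your mouse only has two buttons). The right button jumps straight to the Save screen: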

Click any slot to save.

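Each save slot shows a screenshot and the time of the save. Unfortunately I have not yet found a way to include an excerpt of the current dialogue; this is easier to do in krkr. Also, viewing the previous line in Ren'Py does not bring up a text log in a backlog mode as krkr does. Instead the whole game rolls back to the previous line, so sound and visual effects can be replayed as well. You will see this for yourself once there is some longer dialogue to try.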

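Since this game has nothing in it yet, close it and return to the Ren'Py launcher. Time to look at JEdit! Click Edit Script. JEdit is a Java program, so it takes a little while to start the first time. Don't get impatient and click repeatedly, or you will end up with a lot of JEdit windows.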

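A rather advanced editor, and a colorful one. One basic and crucial concept first: in a Ren'Py script, the indentation at the start of a line matters. Look at the two lines under label start:. Blank lines do not matter, but notice that e "... ..." does not start in the first column. The leading spaces must not be removed, because Ren'Py uses the position where each line starts to decide whether lines belong to the same block. This will be covered again when the syntax is introduced in detail next time; for now it is enough to know what the file looks like when opened.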
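To make the indentation rule concrete, here is a sketch of roughly what a freshly generated script.rpy looks like. The exact wording and the character definition are assumptions based on the Ren'Py 6-era project template; your file may differ.

```renpy
# Sketch of a newly generated script.rpy (contents assumed, not copied
# from the author's project).

# Declare the character "e" used by the dialogue lines below.
define e = Character('Eileen', color="#c8ffc8")

# The game starts here.
label start:

    # Both dialogue lines start with the same four spaces, so Ren'Py
    # treats them as part of the block opened by "label start:".
    # The blank lines between them are ignored.
    e "You've created a new Ren'Py game."

    e "Once you add a story, pictures, and music, you can release it to the world!"

    return
```

Removing the leading spaces from one of the `e` lines, or adding an extra one, would put that line outside the `label start:` block and Ren'Py would report an error.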

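Finally, there is one thorny problem left: Chinese. This is Taiwan, after all, so how could we not use Chinese? But try replacing the English text inside e "... ..." with Chinese and you will find it does not display at all. Ren'Py does not ship with a Chinese font, so of course it cannot render the text.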

So do we have to make an English-only game? Of course not. First find a Chinese font file (extension .ttf), pick one you like, and drop it into the game folder under Demo. I used MSJHBD.TTF.

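I forget which font that is (the file name is that of Microsoft JhengHei Bold), but the point is that it is a Traditional Chinese font. Back in JEdit, switch to the options.rpy tab and go to lines 148 and 152: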

Change them as follows:
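The screenshot of the edited lines is not reproduced here. As a rough sketch, assuming lines 148 and 152 are the commented-out default font and default text size settings in the generated options.rpy (an assumption; line numbers and contents vary between Ren'Py versions), the result might look like this. It is written as a standalone block so it is valid on its own. In options.rpy the two assignments already sit inside an indented init block, so there you would only uncomment and edit them in place, keeping their existing indentation.

```renpy
init python:
    # Font file copied into the project's game/ folder. The name must
    # match the file exactly, including upper and lower case.
    style.default.font = "MSJHBD.TTF"

    # Default text size. 22 is assumed to be the template's value;
    # adjust it if the Chinese glyphs look too large or too small.
    style.default.size = 22
```

Newer Ren'Py releases use a different options and GUI layout, so these `style.default` lines apply to older templates like the one described in this post.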

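Remember what I said earlier: watch the leading spaces on these lines. There must not be one space too many or too few, or the program will report an error. In JEdit, Ctrl+S saves and Ctrl+Z undoes the last step, basically the same as Word. As long as you do not close JEdit, you can keep undoing all the way back to the state of the file when it was first opened, which is quite handy. When you are done, run the project again.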

Yay! Chinese text appears!

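The advantage of bundling your own font file is that Ren'Py does not care whether the current OS has a Traditional Chinese font installed. Even if you run the game on Japanese Windows, Traditional Chinese still displays. This matters a great deal for a Unicode program, and players in other locales do not have to keep switching the system locale just to run it.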

Last of all, what if you really cannot cope with English? Go to the official site: http://renpy.org/wiki/renpy/doc/translations/Translations This page lists many languages. How do you use it?

Here in Taiwan we naturally pick Traditional Chinese.

Copy the code on that page, go back to JEdit, and choose File → New from the menu to create a new buffer:

Then save the file in the same folder as the scripts, naming it translations.rpy:
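The wiki code itself is not reproduced in this post. For orientation only, here is a minimal sketch of what such a translations.rpy might contain, assuming it works by filling `config.translations`, the dictionary older Ren'Py versions use to translate menu text. The entries and their Chinese wording are illustrative, not the wiki's actual list.

```renpy
init python:
    # Hypothetical entries: each key is the English text of a menu
    # button, each value is the text to show in its place.
    config.translations.update({
        "Start Game": u"開始遊戲",
        "Load Game": u"讀取遊戲",
        "Preferences": u"設定",
        "Help": u"說明",
        "Quit": u"離開",
    })
```

Save the file as UTF-8 so the Chinese strings are read correctly.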

Go back to the options.rpy tab and add one line, as follows:

Once all that is done, run the project again and see what it looks like:

Everything is in Chinese now! Even the confirmation dialogs!

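If you are not happy with the translation, you can open translations.rpy and edit it directly. Following the method on the official site, you can turn the entire launcher into a Traditional Chinese version, but I personally think that carries some risk, since locale issues can still crop up, and it also turns every English button into Chinese. I prefer to update each project individually as needed; after all, keeping some buttons in English is not bad either. That's it for this week. Next week we start on writing scripts.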